EmotiBrush

Overview

EmotiBrush is a collaborative VR painting tool designed for interpersonal emotional expression.

Instructor

Harpreet Sareen

Class

Major studio I

Duration

October - December 2018

Tool

Unity, SteamVR, Arduino

Brief

EmotiBrush aims to raise emotional awareness and strengthen interpersonal relationships. With this tool, a user creates a three-dimensional painting while control of the brush is decoupled from their own expression and driven instead by another person's feelings. We monitor muscle activity, heart rate, brainwaves, and body temperature to create an emotion map, and these parameters are mapped to brush strokes. It is a pair-only painting tool that lets one user paint with the other's emotions. This paper also describes the composition of the brush by analyzing, with the database, the relationship between each element of the brush and inner emotion.

What if emotion could be drawn and shared with someone close to you?

Emotion is how people present themselves in this world; it is how people feel and react to their experiences and conditions. Understanding emotion is a way to identify and learn about oneself and others [1]. Human emotion is inseparably connected with the human body, including bio-signals, gestures, body temperature, and other related aspects. Although emotion can be expressed verbally and physically, physiological signals, which are controlled by the autonomic nervous system, are spontaneous and cannot easily be altered deliberately [2].

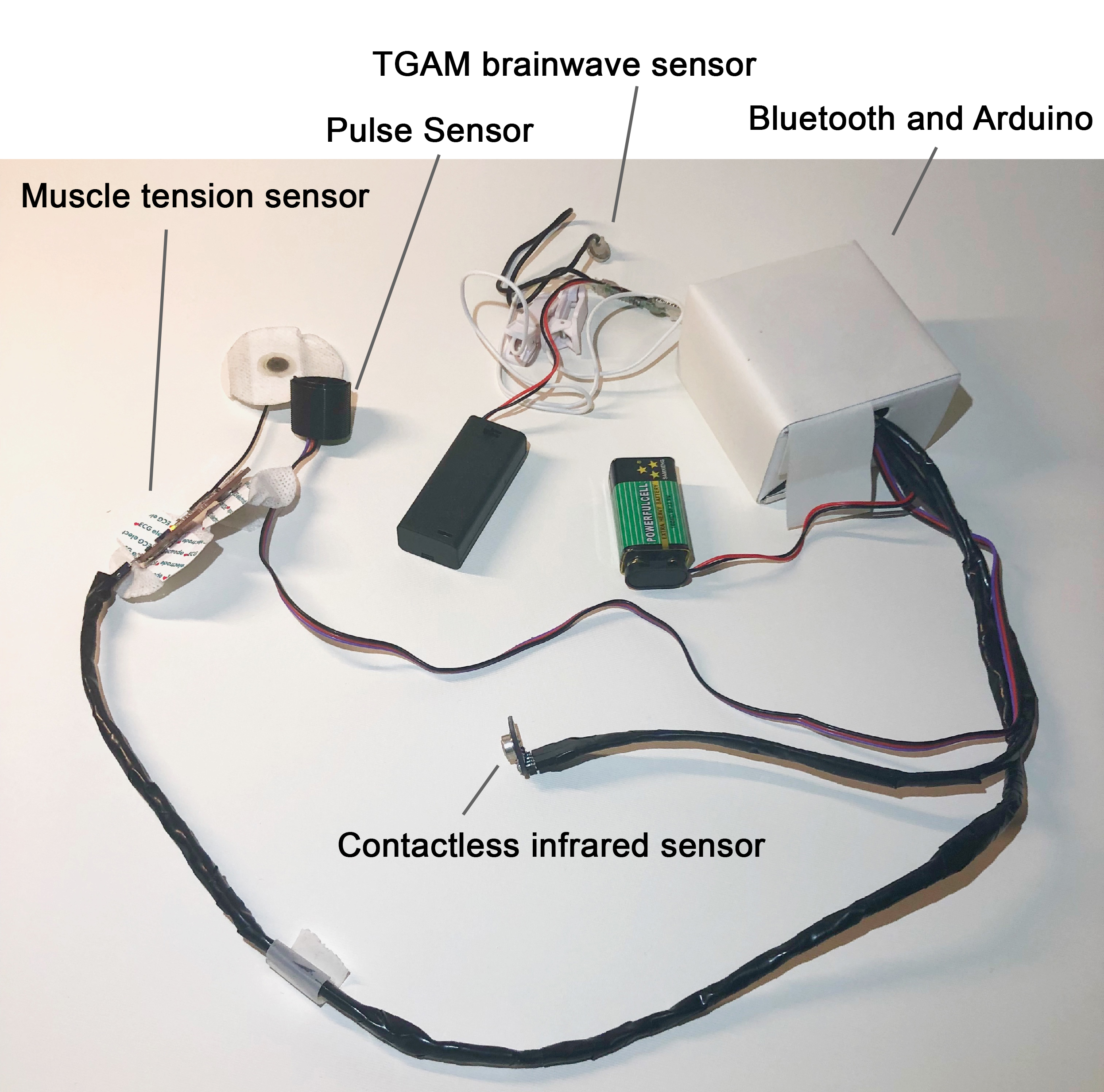

Set up

The system is driven by an HTC Vive and powered by SteamVR on a Windows 10 laptop; the minimum GPU configuration is a GTX 970. The figure on the right shows the four sensors users wear while they paint. Figure 2 shows the painting environment designed for the user.

Design and implementation

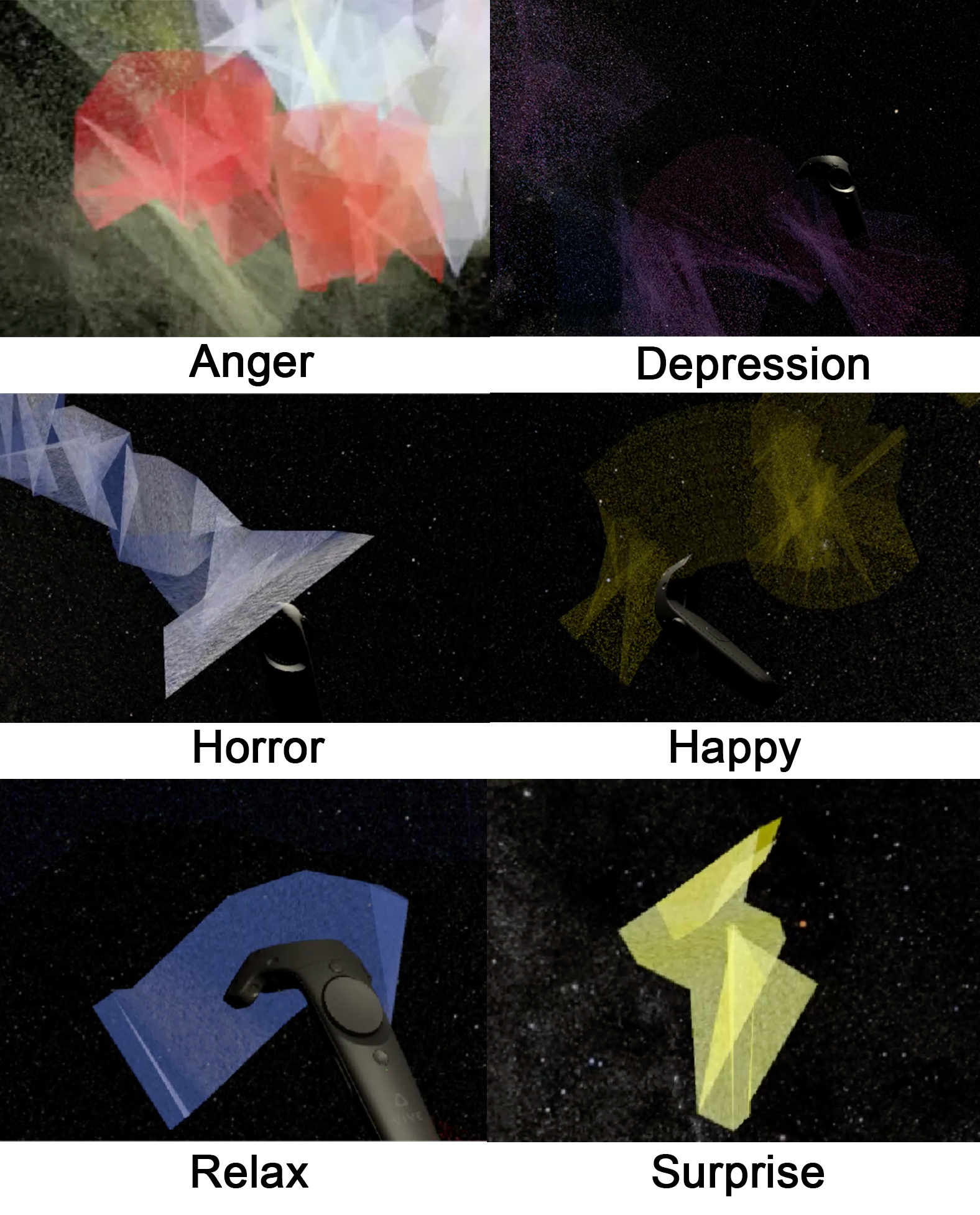

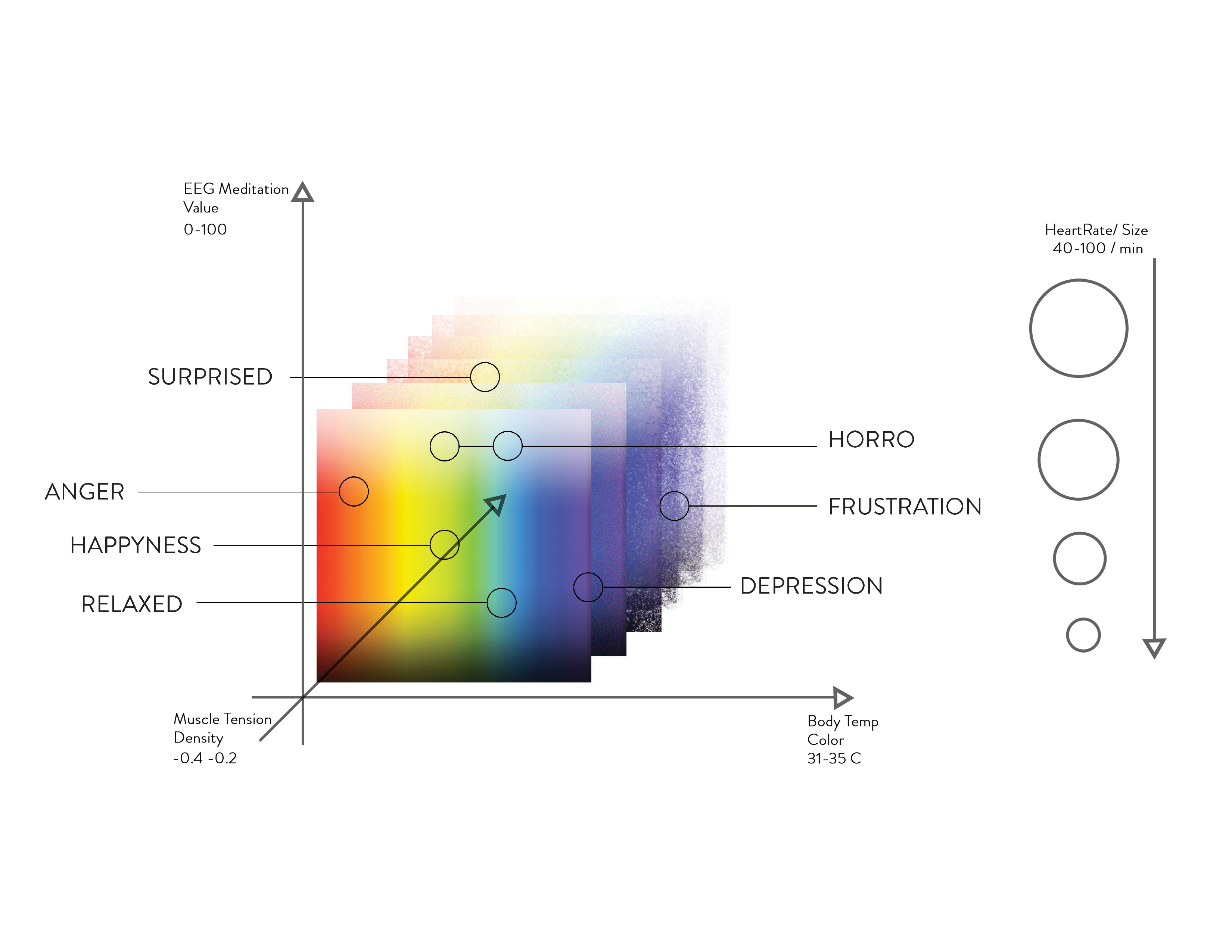

It is important to consider the composition of the brush itself, which includes color, size, texture, value, and so on. We currently use four variables that change automatically as the data collected from the user changes.

We decided to monitor muscle activity, heart rate, brainwaves, and body temperature to create an emotion map because these four parameters are relatively easy to detect and all of them are supported by a large body of research. Research indicates that reliable measurement, via muscle tension, of individual differences in incidental facial responses to emotional expressions is feasible [1] ... Hue is mapped to skin temperature because people commonly associate color, temperature, and emotion. The size of the brush changes with the heartbeat. Figure 6 is a chart showing the process of generating different brushes.
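The two mappings named above (skin temperature to hue, heartbeat to brush size) can be illustrated with a small sketch. It is written in Python for readability rather than in the project's Unity code. The function names, the sensor ranges (30 to 37 °C, 50 to 150 bpm), the size range, and the choice of cool-to-blue and warm-to-red are all assumptions made for illustration. They are not values taken from the prototype.

```python
import colorsys
from dataclasses import dataclass


def remap(value, in_min, in_max, out_min, out_max):
    """Linearly remap value from [in_min, in_max] to [out_min, out_max].

    Inputs outside the input range are clamped to its ends.
    """
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(1.0, t))
    return out_min + t * (out_max - out_min)


@dataclass
class BrushState:
    hue: float   # HSV hue in [0, 1]
    size: float  # stroke width in scene units (hypothetical unit)

    def rgb(self):
        # Full saturation and value; only the hue carries the signal here.
        return colorsys.hsv_to_rgb(self.hue, 1.0, 1.0)


def brush_from_signals(skin_temp_c, heart_rate_bpm):
    """Derive brush hue and size from two partner readings.

    All numeric ranges below are illustrative assumptions.
    """
    # Cooler skin -> blue (hue 2/3), warmer skin -> red (hue 0).
    hue = remap(skin_temp_c, 30.0, 37.0, 2.0 / 3.0, 0.0)
    # Faster heartbeat -> thicker stroke.
    size = remap(heart_rate_bpm, 50.0, 150.0, 0.01, 0.10)
    return BrushState(hue=hue, size=size)


if __name__ == "__main__":
    brush = brush_from_signals(skin_temp_c=34.0, heart_rate_bpm=90.0)
    print(brush, brush.rgb())
```

Clamping keeps the brush in a usable range when a sensor reports an outlier. A linear remap is only the simplest choice; the prototype may use a different curve.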

Final Prototype

The target users are people who have an intimate relationship and enjoy painting, since their strong bond and abundant shared experience create a stronger emotional connection. We did preliminary qualitative testing with one pair of friends. They shared memories, either painful or happy. The users reported that they sometimes try to hide their emotions when they speak, but the brush color changes quite noticeably. Also, when the brush changes style, they talk more and try harder to understand each other.

References

1. Künecke, Janina, et al. “Facial EMG Responses to Emotional Expressions Are Related to Emotion Perception Ability.” PLoS ONE, vol. 9, no. 1, 2014, doi:10.1371/journal.pone.0084053.

2. Lee, C.k., et al. “Using Neural Network to Recognize Human Emotions from Heart Rate Variability and Skin Resistance.” 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, Sept. 2005, doi:10.1109/iembs.2005.1615734.

3. Norman K. Denzin. 2007. On Understanding Emotion. Transaction Publishers, New Brunswick, NJ.

4. Marín-Morales, Javier, et al. “Affective Computing in Virtual Reality: Emotion Recognition from Brain and Heartbeat Dynamics Using Wearable Sensors.” Scientific Reports, vol. 8, no. 1, Dec. 2018, doi:10.1038/s41598-018-32063-4.

5. Nummenmaa L, Glerean E, Hari R, Hietanen JK. “Bodily maps of emotions” Proc Natl Acad Sci U S A. 2014 Jan 14; 111(2):646-51

6. Daniela Girardi, Filippo Lanubile, Nicole Novielli. 2017. Emotion Detection Using Noninvasive Low-Cost Sensors.

7. Arnd-Caddigan, Margaret. “Sherry Turkle: Alone Together: Why We Expect More from Technology and Less from Each Other.” Clinical Social Work Journal, vol. 43, no. 2, Apr. 2015, pp. 247–248., doi:10.1007/s10615-014-0511-4.

8. Cearreta, I., López, J.M., López de Ipiña, K., Garay, N., Hernández, C., Graña, M.: A study of the state of the art of affective computing in ambient intelligence environments. In: Interacción 2007 (VIII Congreso de Interacción Persona-Ordenador, II Congreso Español de Informática - CEDI 2007), pp. 333–342. Asociación Interacción Persona-Ordenador (AIPO) (2007)

9. Szwoch Wioleta. 2013. Using physiological signals for emotion recognition.
